PRISMATIC

TYPE

Interactive Music Mobile

ROLE

UX Designer & IoT Developer

DURATION

16 Weeks

ORGANIZATION

USC

An interactive music mobile that combines IoT technology with physical computing to create a shared music visualization experience, responding to both ambient sounds and direct user interaction.

The Challenge

In our increasingly digital world, music listening has become a solitary experience, often confined to personal headphones and individual devices. Our team was challenged to create a physical computing project that would transform music from a private experience back into a shared, communal one, while creating visual representations that enhance the emotional connection to sound.

Key questions that guided our design process and the PRISMATIC solution

Project Goals

- Create a physical installation that responds to music in real time

- Design an experience that encourages shared music enjoyment

- Develop a system that translates audio into meaningful visual patterns

- Build a product that enhances emotional connection to music

- Integrate IoT capabilities for remote interaction and control

Our Solution: PRISMATIC

PRISMATIC is an interactive music mobile that combines physical computing with IoT technology to create a shared music visualization experience. The installation features suspended geometric elements that move, change color, and emit light patterns in response to music, creating a dynamic, immersive environment that enhances the emotional experience of listening.

Key Features

- Audio-Responsive Elements: Geometric shapes that respond to different frequency ranges in music

- Dynamic Lighting: LED arrays that change color and intensity based on music tempo, volume, and emotional tone

- Kinetic Movement: Subtle mechanical movements that correspond to rhythm and beat

- IoT Connectivity: Remote control and music selection via smartphone app

- Ambient Mode: Responds to environmental sounds when no music is playing

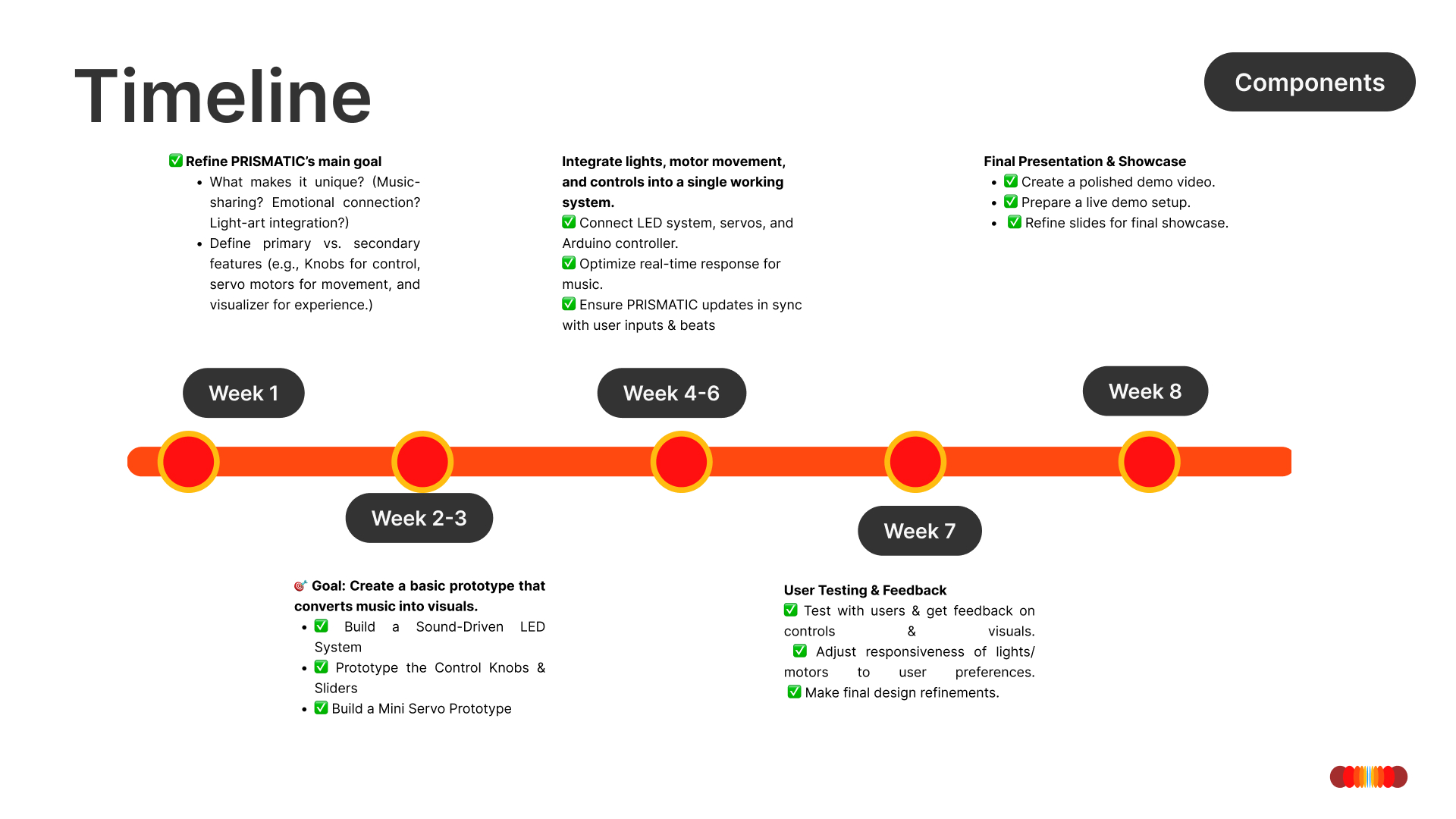

Project Timeline

The PRISMATIC project was developed over a 10-week period, with clear milestones and deliverables at each stage.

Our 10-week development timeline with key milestones and deliverables

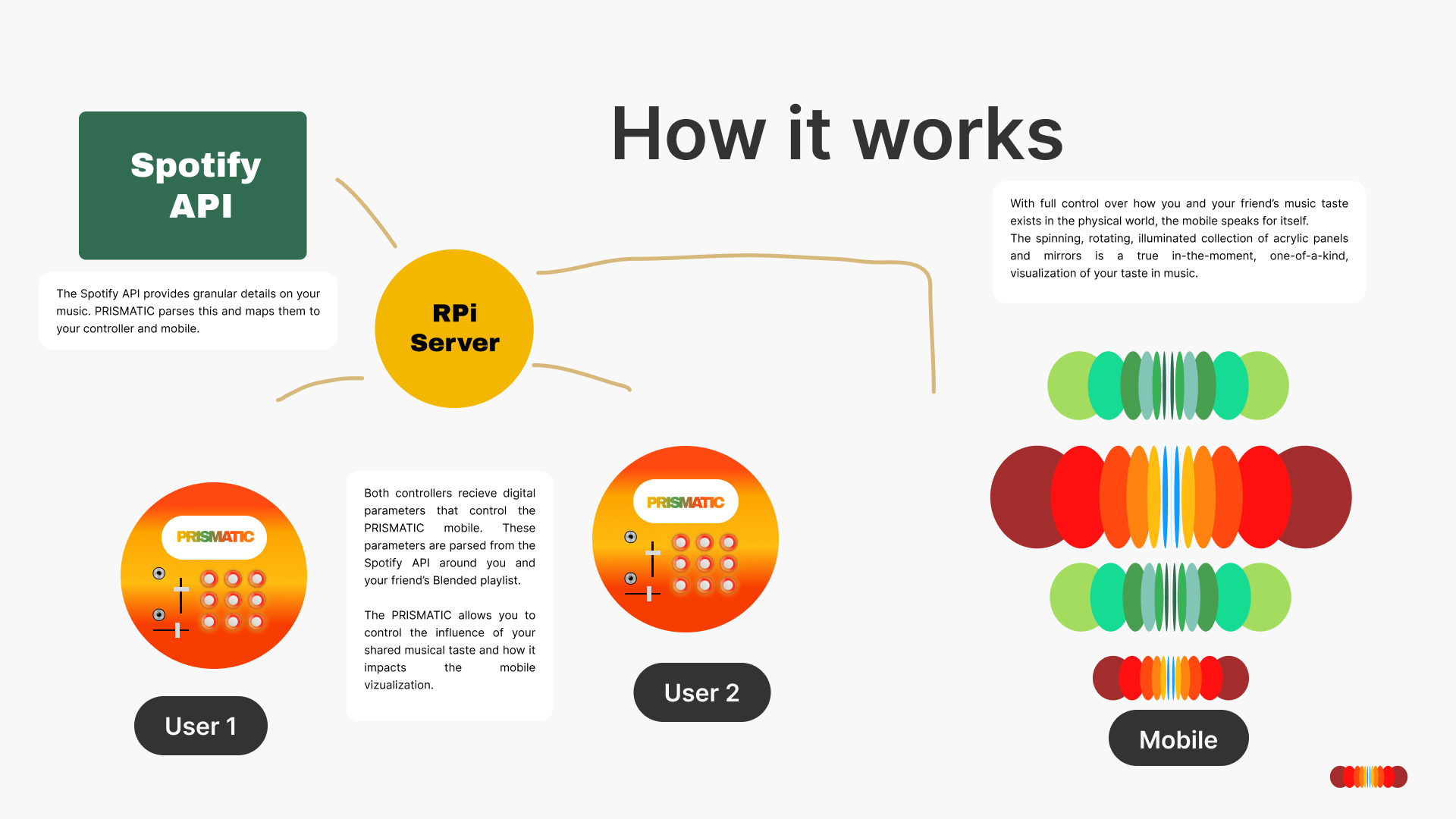

How It Works

PRISMATIC combines hardware and software components to create a seamless, responsive experience. The system processes audio input in real time: it analyzes the frequency spectrum, detects beats, and classifies emotional characteristics, then uses those results to drive the visual and kinetic outputs.

System architecture showing how PRISMATIC connects users through music
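To illustrate the frequency-analysis step, here is a minimal sketch of splitting one audio frame into band energies, assuming three bands (bass, mid, treble) that could each drive a different geometric element. The band edges and function names are hypothetical, and a naive DFT stands in for an optimized FFT so the example stays self-contained. It is not the project's actual firmware.

```python
import cmath
import math

# Hypothetical band edges in Hz (bass, mid, treble); not taken from the project.
BANDS = [(20, 250), (250, 2000), (2000, 8000)]


def dft_magnitudes(samples):
    """Naive O(n^2) DFT returning magnitudes for bins 0..n/2.

    A real device would use an optimized FFT; the result is the same spectrum.
    """
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        acc = 0j
        for t, x in enumerate(samples):
            acc += x * cmath.exp(-2j * math.pi * k * t / n)
        mags.append(abs(acc))
    return mags


def band_energies(samples, sample_rate, bands=BANDS):
    """Sum the squared spectral magnitude inside each (low_hz, high_hz) band."""
    mags = dft_magnitudes(samples)
    bin_hz = sample_rate / len(samples)  # frequency width of one bin
    energies = []
    for low, high in bands:
        total = 0.0
        for k, mag in enumerate(mags):
            if low <= k * bin_hz < high:
                total += mag * mag
        energies.append(total)
    return energies


def dominant_band(samples, sample_rate, bands=BANDS):
    """Index of the band that carries the most energy in this frame."""
    energies = band_energies(samples, sample_rate, bands)
    return max(range(len(bands)), key=lambda i: energies[i])
```

In this sketch, each band's energy could be scaled into a brightness or servo angle for the element assigned to that band.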

Hardware Components

- Microcontroller: Arduino Mega for real-time audio processing and control

- IoT Module: ESP32 for wireless connectivity and remote control

- Audio Input: High-quality microphone array for ambient sound detection

- Actuators: Servo motors for kinetic movement of geometric elements

- Lighting: Addressable RGB LED strips and spotlights

- Structure: Lightweight, suspended geometric forms made from translucent materials

Software Systems

- Audio Analysis: Fast Fourier Transform (FFT) for frequency spectrum analysis

- Beat Detection: Algorithm to identify rhythm patterns and tempo

- Emotion Recognition: Machine learning model to classify emotional characteristics of music

- Mobile App: React Native application for remote control and customization

- Cloud Integration: Firebase for user preferences and music history
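The beat detection item above can be sketched with a simple energy-based approach: a frame counts as a beat when its energy clearly exceeds the recent average. This is one common technique, shown here as an assumption rather than the algorithm PRISMATIC actually used. The class name, history length, and threshold are hypothetical.

```python
from collections import deque


class EnergyBeatDetector:
    """Flags a beat when a frame's energy exceeds a multiple of the recent average."""

    def __init__(self, history=43, threshold=1.5, min_energy=1e-6):
        self.history = deque(maxlen=history)  # recent frame energies
        self.threshold = threshold            # how far above average counts as a beat
        self.min_energy = min_energy          # ignore near-silent frames

    def process(self, frame):
        """Return True if this frame of samples looks like a beat."""
        energy = sum(x * x for x in frame) / len(frame)
        is_beat = False
        # Only judge once the history window is full, to avoid startup noise.
        if len(self.history) == self.history.maxlen:
            average = sum(self.history) / len(self.history)
            is_beat = energy > self.min_energy and energy > self.threshold * average
        self.history.append(energy)
        return is_beat


def estimate_bpm(beat_times):
    """Estimate tempo from beat timestamps (seconds) using the median interval."""
    if len(beat_times) < 2:
        return None
    intervals = sorted(b - a for a, b in zip(beat_times, beat_times[1:]))
    median = intervals[len(intervals) // 2]
    return 60.0 / median
```

Using the median interval rather than the mean keeps a single missed or spurious beat from skewing the tempo estimate.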

Physical Design

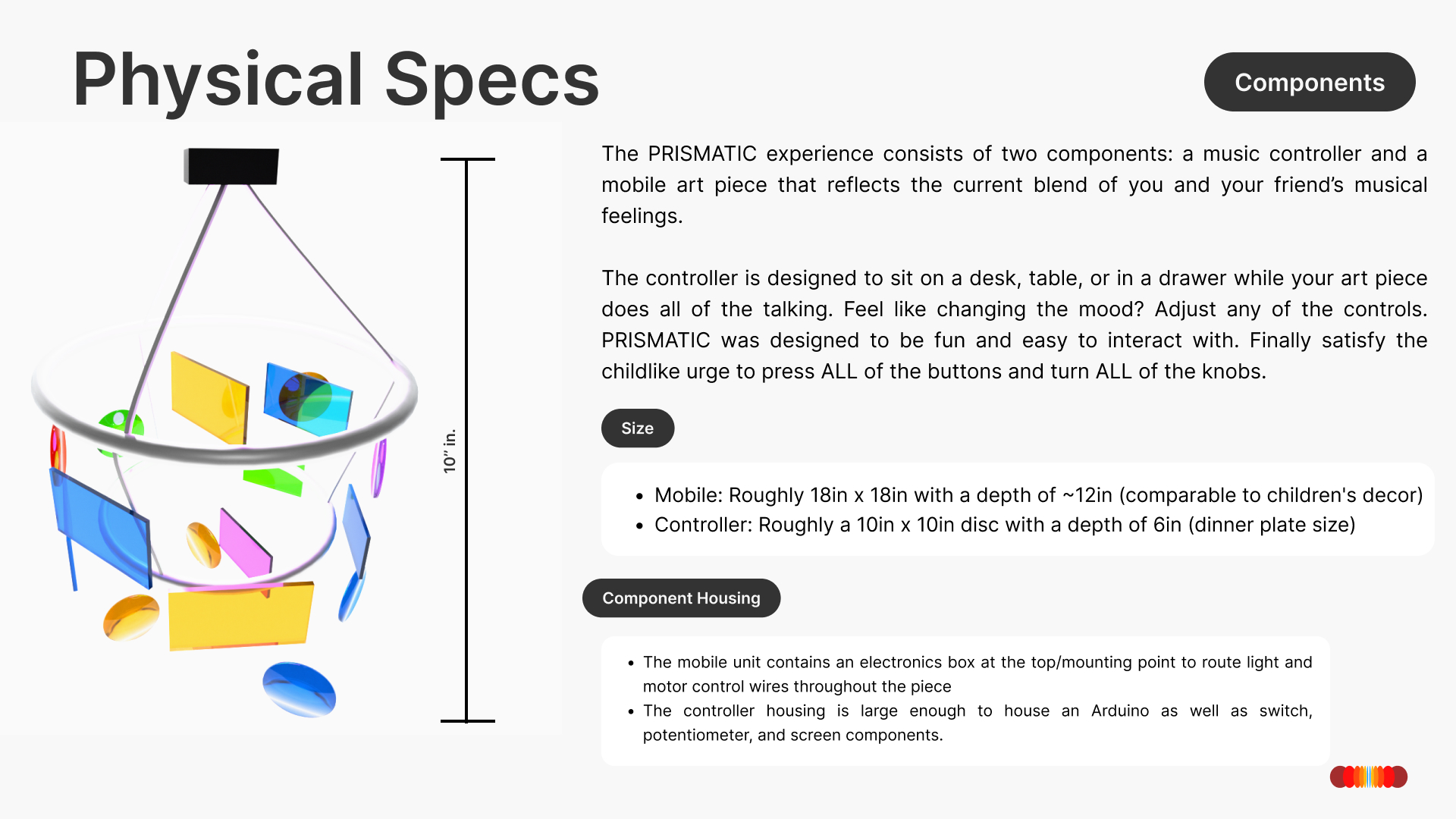

The physical design of PRISMATIC consists of two main components: a controller device and a suspended mobile art piece. Both elements were designed to be visually appealing while providing intuitive interaction.

Physical specifications of the PRISMATIC mobile

Controller design with interactive elements

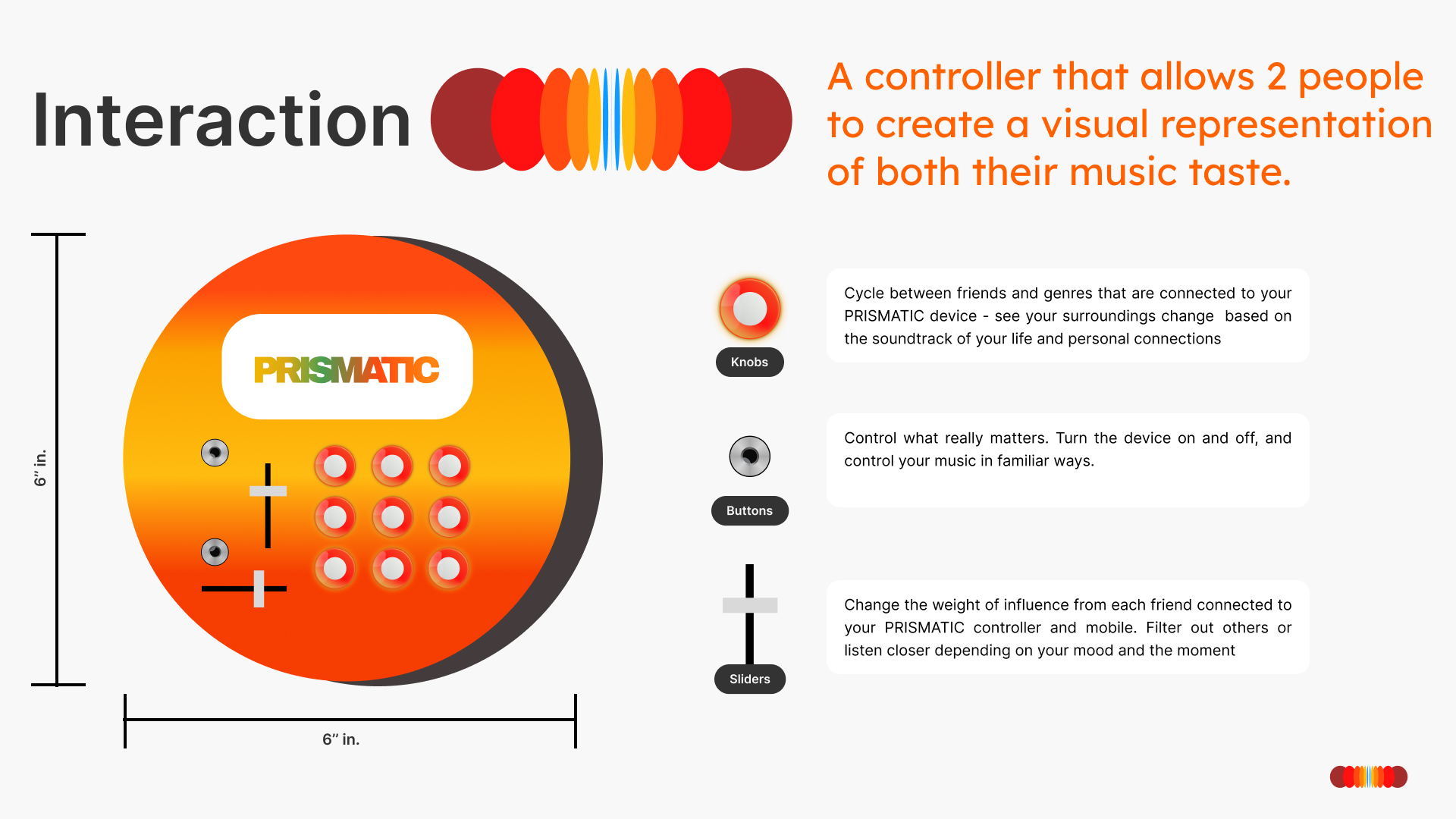

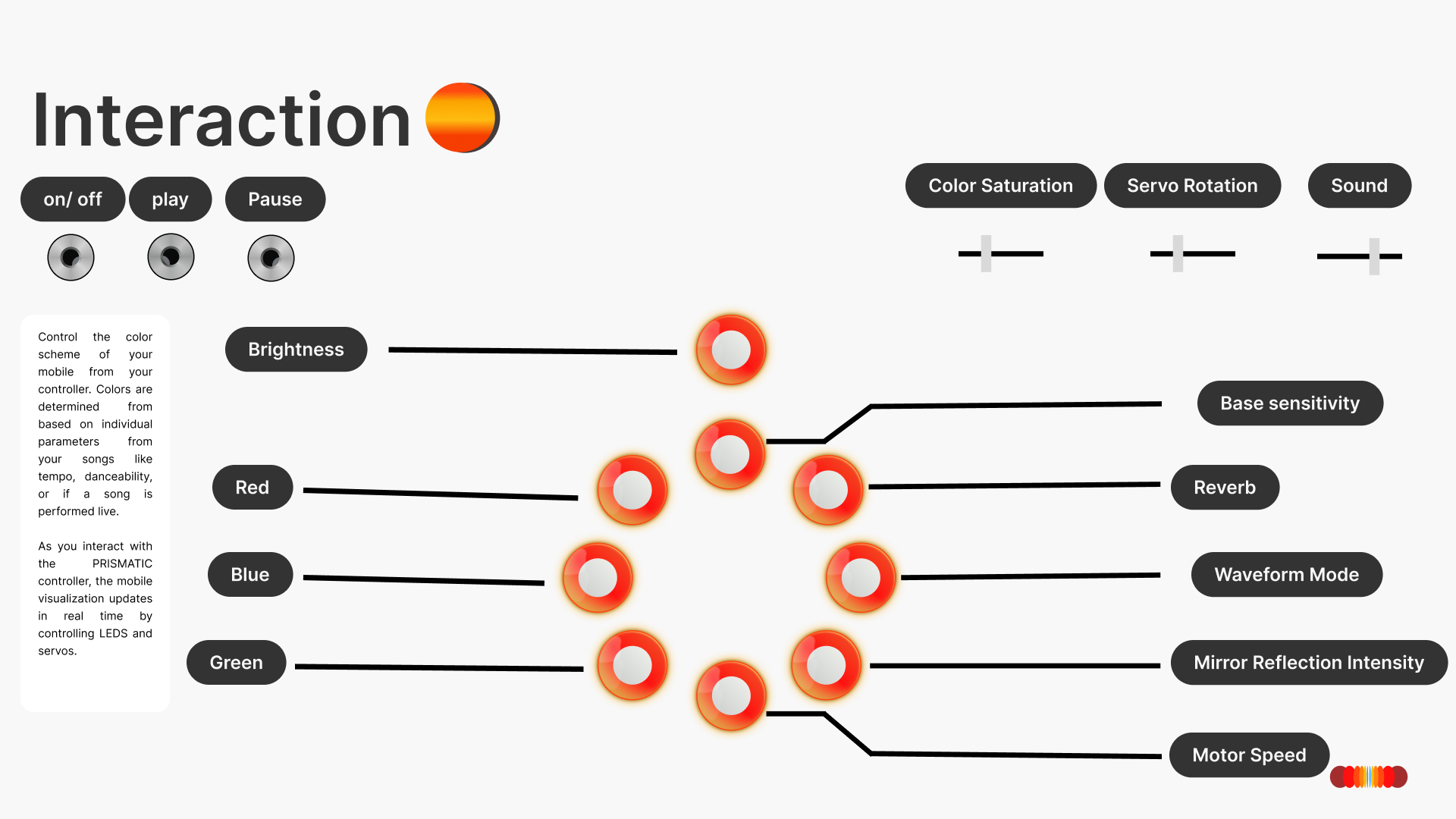

Interaction Design

The interaction design of PRISMATIC focuses on creating an intuitive and engaging experience for users. The controller provides multiple ways to influence the visual and kinetic behavior of the mobile.

Control interface showing various parameters users can adjust

Active Listening Mode

Users can select specific songs through the mobile app, and PRISMATIC will create a custom visual experience tailored to that music. The system analyzes the emotional qualities of the song and creates corresponding color schemes and movement patterns.
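One way to picture the step from emotional qualities to a color scheme is the sketch below. It assumes the emotion model outputs two values in the range 0 to 1, valence (negative to positive) and arousal (calm to energetic), and maps them to an RGB color. Both the two-value representation and the specific hue, saturation, and brightness ranges are illustrative assumptions, not the project's actual mapping.

```python
import colorsys


def clamp01(value):
    """Clamp a number to the range 0..1."""
    return min(max(value, 0.0), 1.0)


def emotion_to_rgb(valence, arousal):
    """Map hypothetical valence/arousal scores (0..1) to an 8-bit RGB tuple.

    Assumed mapping: low valence gives cool blue (240 degrees), high valence
    gives warm yellow (60 degrees); higher arousal gives a more saturated,
    brighter color.
    """
    valence = clamp01(valence)
    arousal = clamp01(arousal)
    hue_degrees = 240.0 - 180.0 * valence
    saturation = 0.4 + 0.6 * arousal
    brightness = 0.3 + 0.7 * arousal
    r, g, b = colorsys.hsv_to_rgb(hue_degrees / 360.0, saturation, brightness)
    return (round(r * 255), round(g * 255), round(b * 255))
```

A calm, sad track would land in dim blues under this mapping, while an energetic, happy track would produce bright yellows.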

Ambient Response Mode

When no specific music is playing, PRISMATIC responds to ambient sounds in the environment—voices, footsteps, or background music—creating subtle visual changes that make the space feel alive and responsive.
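The subtle response described for ambient mode can be approximated by smoothing the microphone level so the lights rise quickly with a sound and fade slowly afterward. The sketch below assumes normalized samples in the range -1 to 1. The attack, release, noise floor, and brightness limits are hypothetical values chosen for illustration.

```python
import math


class AmbientLevel:
    """Tracks a smoothed loudness level with fast attack and slow release."""

    def __init__(self, attack=0.5, release=0.05, noise_floor=0.02):
        self.attack = attack            # smoothing factor when the level rises
        self.release = release          # smoothing factor when the level falls
        self.noise_floor = noise_floor  # RMS below this is treated as silence
        self.level = 0.0

    def update(self, frame):
        """Feed one frame of samples and return the updated smoothed level."""
        rms = math.sqrt(sum(x * x for x in frame) / len(frame))
        target = max(0.0, rms - self.noise_floor)
        coeff = self.attack if target > self.level else self.release
        self.level += coeff * (target - self.level)
        return self.level


def level_to_brightness(level, max_level=0.5, min_brightness=10, max_brightness=120):
    """Scale a smoothed level into a deliberately limited LED brightness range."""
    fraction = min(max(level, 0.0) / max_level, 1.0)
    return round(min_brightness + fraction * (max_brightness - min_brightness))
```

Capping the brightness well below full scale is what keeps the ambient response subtle compared with the music-driven modes.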

Interactive Mode

Users can directly influence the installation through the controller, adjusting color themes, sensitivity, and movement patterns. This creates a collaborative experience where multiple people can contribute to the visual composition.

Technical Implementation

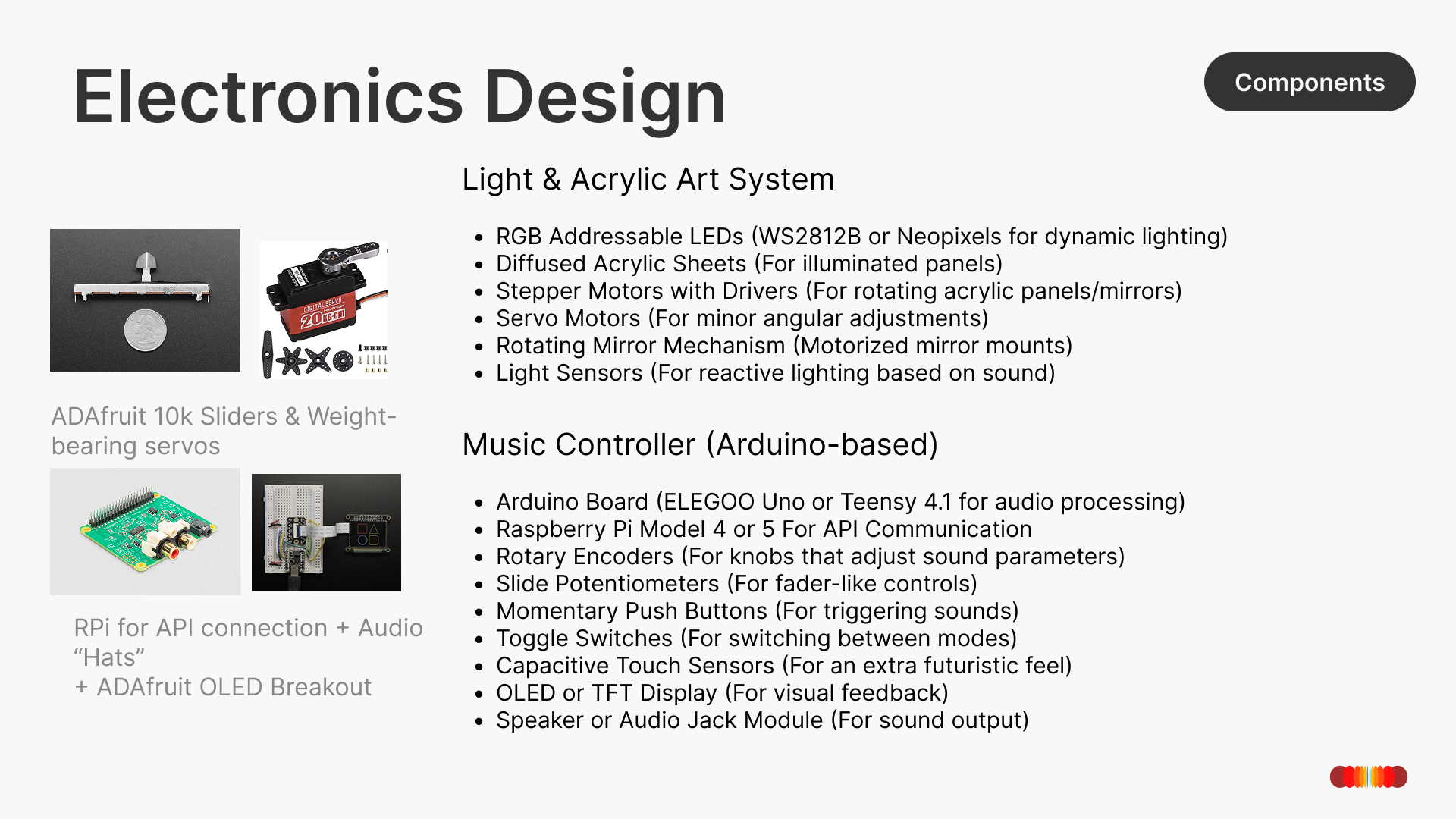

The technical implementation of PRISMATIC involved integrating the microcontroller, IoT module, microphone array, servo motors, and LED lighting, and developing custom audio-analysis and control software so that the system would be both responsive and reliable.

Electronics components used in the PRISMATIC system

Development Challenges

- Latency Reduction: Minimizing delay between audio input and visual response

- Power Management: Balancing power requirements for LEDs, motors, and processing

- Wireless Reliability: Ensuring stable connectivity between controller and mobile

- Audio Analysis Accuracy: Developing algorithms that accurately identify musical features

- Physical Stability: Creating a balanced mobile that moves gracefully without tangling
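The latency challenge above involves a basic trade-off in FFT-based analysis: a longer analysis window gives finer frequency resolution, but the system must wait for the whole window before it can react. The sketch below works through that arithmetic. The 44,100 Hz sample rate and the 30 ms budget in the usage note are assumptions for illustration, not measured figures from the project.

```python
def window_latency_ms(window_size, sample_rate):
    """Time needed to collect one analysis window, in milliseconds."""
    return 1000.0 * window_size / sample_rate


def frequency_resolution_hz(window_size, sample_rate):
    """Spacing between adjacent FFT bins, in Hz."""
    return sample_rate / window_size


def largest_window_within(budget_ms, sample_rate):
    """Largest power-of-two window whose buffering time fits the budget."""
    window = 1
    while window_latency_ms(window * 2, sample_rate) <= budget_ms:
        window *= 2
    return window
```

For example, at an assumed 44,100 Hz sample rate, a 1,024-sample window takes about 23.2 ms to fill and resolves frequencies about 43 Hz apart, and `largest_window_within(30, 44100)` returns 1024. Buffering is only one part of the total delay; processing time, wireless transmission, and actuator response add to it.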

Reflections

PRISMATIC challenged our team to bridge the worlds of physical computing, IoT technology, and emotional design. The project taught us valuable lessons about creating systems that respond to human experiences in meaningful ways.

Key Learnings

- Why low latency matters: a system only feels responsive to music if the visual response follows the sound in real time

- How to translate abstract concepts like musical emotion into concrete design parameters like color and movement

- Techniques for balancing technical complexity with reliability in interactive installations

- The value of creating experiences that encourage social interaction and shared appreciation

- How physical computing can create more embodied, immersive experiences than screen-based interfaces alone